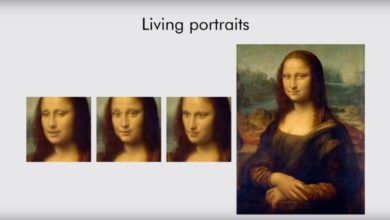

Earlier this year, researchers at the University of Southern California, in collaboration with Facebook’s Reality Labs and AI Research, showed off some pretty wild work in highly detailed “3D human digitization.”

Put simply, they’ve built a process that uses deep neural networks to convert 2D images of people into high-resolution 3D models. You can check out a brief overview in the video below, or there’s a longer five-minute version here.

While similar techniques already exist, they say this new approach “significantly outperforms” them.

“Due to memory limitations in current hardware, previous approaches tend to take low resolution images as input to cover large spatial context, and produce less precise (or low resolution) 3D estimates as a result. We address this limitation by formulating a multi-level architecture that is end-to-end trainable. A coarse level observes the whole image at lower resolution and focuses on holistic reasoning. This provides context to a fine level which estimates highly detailed geometry by observing higher-resolution images.”
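If the coarse-then-fine idea feels abstract, here is a toy Python sketch of the data flow the quote describes. To be clear, this is not the researchers’ code: plain averaging stands in for the learned neural networks, and the function names (`coarse_level`, `fine_level`, `multi_level`) and the tiny 4×4 “image” are made up for illustration. All it shows is a low-resolution pass producing context that a full-resolution pass then reuses.

```python
# Toy illustration only: simple averaging stands in for the learned networks.
# All names here are hypothetical and are not taken from the PIFuHD code.

def coarse_level(image, factor):
    """See the whole image at low resolution by average-pooling
    factor x factor blocks (image sides must be divisible by factor)."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[y + dy][x + dx] for dy in range(factor) for dx in range(factor))
            / (factor * factor)
            for x in range(0, w, factor)
        ]
        for y in range(0, h, factor)
    ]

def fine_level(image, context, factor):
    """Pair every full-resolution pixel with the coarse context that covers it."""
    return [
        [(pixel, context[y // factor][x // factor]) for x, pixel in enumerate(row)]
        for y, row in enumerate(image)
    ]

def multi_level(image, factor=2):
    """Run the coarse pass first, then hand its output to the fine pass."""
    context = coarse_level(image, factor)
    return fine_level(image, context, factor)

image = [
    [0, 2, 4, 6],
    [2, 4, 6, 8],
    [1, 1, 3, 3],
    [1, 1, 3, 3],
]
result = multi_level(image)
```

Each entry in `result` holds a full-resolution pixel alongside the low-resolution summary of its neighborhood, which is the rough shape of “context from a coarse level feeding a fine level.” In the real system both levels are trained neural networks and the output is 3D geometry, not pairs of numbers.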

You can read more about their project, titled “PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization,” over on GitHub, or check out their study’s full paper.